- Aws Cli Download From S3 To Computer

- Aws Cli For S3

- Aws Cli Download File From S3

- Aws Cli Download From S3 Edge

- Aws S3 Download Folder

- Aws Cli Download All Files From S3 Bucket


I noticed that there doesn't seem to be an option to download an entire S3 bucket from the AWS Management Console.


Is there an easy way to grab everything in one of my buckets? I was thinking about making the root folder public, using wget to grab it all, and then making it private again, but I don't know if there's an easier way.

27 Answers

AWS CLI

AWS has recently released its own command line tools. They work much like boto and can be installed using sudo easy_install awscli or sudo pip install awscli.

Once installed, you can then simply run:

Command:

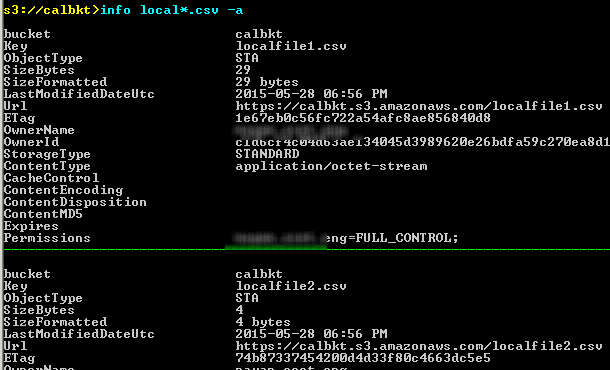

Output:

This will download all of your files (one-way sync). It will not delete any existing files in your current directory (unless you specify --delete), and it won't change or delete any files on S3.
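A minimal sketch of the same download wrapped in a reusable shell function, assuming a POSIX shell and an AWS CLI that is already configured. The function name mirror_bucket and the bucket name mybucket are hypothetical; the only real CLI pieces are aws s3 sync and its --delete flag.

```shell
#!/bin/sh
# Hypothetical helper: one-way download of a whole bucket into a local directory.
# Usage: mirror_bucket BUCKET [LOCAL_DIR] [--delete]
mirror_bucket() {
  local bucket="$1" dest="${2:-.}" mode="${3:-}"
  if [ "${mode}" = "--delete" ]; then
    # Also remove local files that no longer exist in the bucket.
    aws s3 sync "s3://${bucket}" "${dest}" --delete
  else
    # Default: only add or update local files; nothing local is deleted.
    aws s3 sync "s3://${bucket}" "${dest}"
  fi
}
```

For example, mirror_bucket mybucket ~/s3-backup --delete also removes local files that are no longer in the bucket; leave --delete off to keep them.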

You can also sync S3 bucket to S3 bucket, or local directory to S3 bucket.

Check out the aws s3 sync documentation for other examples.

Downloading A Folder from a Bucket

Whereas the above example shows how to download a full bucket, you can also download a folder recursively by running aws s3 cp with the --recursive flag against s3://BUCKETNAME/PATH/TO/FOLDER.

This will instruct the CLI to download all files and folder keys recursively within the PATH/TO/FOLDER directory within the BUCKETNAME bucket.
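As a sketch, assuming a configured AWS CLI, the recursive folder download can be written as follows. BUCKETNAME and PATH/TO/FOLDER are the placeholders from the text; download_folder and LocalFolderName are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: recursively download one "folder" (key prefix) from a bucket.
# Usage: download_folder BUCKETNAME PATH/TO/FOLDER LocalFolderName
download_folder() {
  local bucket="$1" prefix="$2" dest="$3"
  # --recursive makes cp copy every object under the prefix, not just a single key.
  aws s3 cp "s3://${bucket}/${prefix}" "${dest}" --recursive
}
```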

You can use s3cmd to download your bucket.
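A hedged example, assuming s3cmd is installed and has already been set up with s3cmd --configure; s3cmd_download_bucket and mybucket are hypothetical names. Using sync rather than a one-off copy means a re-run should skip files that are already up to date.

```shell
#!/bin/sh
# Hypothetical helper; assumes s3cmd was already set up with "s3cmd --configure".
# Usage: s3cmd_download_bucket BUCKET [LOCAL_DIR]
s3cmd_download_bucket() {
  local bucket="$1" dest="${2:-./}"
  # Download the bucket's objects into LOCAL_DIR.
  s3cmd sync "s3://${bucket}/" "${dest}"
}
```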

Update

There is another tool you can use called Rclone, and its documentation includes a code sample for this.
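A minimal sketch, assuming rclone is installed and an S3 remote has already been created with rclone config. The remote name s3remote, the function name, and the paths are hypothetical. rclone copy is used here instead of rclone sync because sync also deletes destination files that are not in the source.

```shell
#!/bin/sh
# Hypothetical helper; assumes an S3 remote was created earlier with "rclone config".
# Usage: rclone_download_bucket REMOTE BUCKET LOCAL_DIR
rclone_download_bucket() {
  local remote="$1" bucket="$2" dest="$3"
  # copy only adds/updates files in LOCAL_DIR; "rclone sync" would also delete extras there.
  rclone copy "${remote}:${bucket}" "${dest}"
}
```

For example: rclone_download_bucket s3remote mybucket ./mybucket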

I've used a few different methods to copy Amazon S3 data to a local machine, including s3cmd, and by far the easiest is Cyberduck. All you need to do is enter your Amazon credentials and use the simple interface to download / upload / sync any of your buckets / folders / files.

You have many options for this, but the best one is the AWS CLI.

Here's a walkthrough:


- Step 1

Download and install the AWS CLI on your machine.

- Step 2

Configure the AWS CLI (aws configure).

Make sure you enter a valid access key and secret key for your AWS user (these are created in the IAM console).

- Step 3

Sync the S3 bucket with the following command:

aws s3 sync s3://yourbucket /local/path

Replace the placeholders in the command as follows:

yourbucket: the S3 bucket that you want to download

/local/path: the path on your local system where you want to download all the files
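Before running step 3 it can help to confirm that the keys from step 2 actually work. The sketch below assumes a POSIX shell; sync_after_check is a hypothetical helper, while aws sts get-caller-identity is a real command that fails when the configured credentials are invalid (it does not check S3 permissions, only that the keys are accepted).

```shell
#!/bin/sh
# Hypothetical helper: verify the configured credentials, then sync the bucket.
# Usage: sync_after_check yourbucket /local/path
sync_after_check() {
  local bucket="$1" dest="$2"
  # get-caller-identity fails if the keys from "aws configure" are missing or invalid.
  if ! aws sts get-caller-identity > /dev/null; then
    echo "AWS credentials are not working; re-run 'aws configure'." >&2
    return 1
  fi
  aws s3 sync "s3://${bucket}" "${dest}"
}
```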

Hope this helps!

To download using the AWS S3 CLI, use aws s3 sync (or aws s3 cp with --recursive).

To download using code, use the AWS SDK.

To download using a GUI, use Cyberduck.

Hope it helps. :)

S3 Browser is the easiest way I have found. It is excellent software, and it is free for non-commercial use. Windows only.

If you use Visual Studio, download http://aws.amazon.com/visualstudio/


Once it is installed, go to Visual Studio > AWS Explorer > S3 > your bucket and double-click it.

In the window you will be able to select all the files. Right-click and download them.

Another option that could help some OS X users is Transmit. It's an FTP program that also lets you connect to your S3 files, and it has an option to mount any FTP or S3 storage as a folder in Finder. But it's only free for a limited time.

I've done a bit of development for S3 and I have not found a simple way to download a whole bucket. If you want to code in Java, the jets3t lib is easy to use to create a list of buckets and iterate over that list to download them.

First, get an access key and secret key from the AWS Management Console so you can create an S3Service object...

Then get an array of your bucket objects...

Finally, iterate over that array to download the objects one at a time with this code...

I put the connection code in a threadsafe singleton. The necessary try/catch syntax has been omitted for obvious reasons.

If you'd rather code in Python you could use Boto instead.

PS: After looking around, BucketExplorer may do what you want: https://forums.aws.amazon.com/thread.jspa?messageID=248429

The answer by @Layke is good, but if you have a ton of data and don't want to wait forever, you should pay close attention to the AWS CLI S3 configuration documentation on how to get the sync command to synchronize buckets with massive parallelization. Setting max_concurrent_requests to 1000 and max_queue_size to 100000 tells the AWS CLI to use 1,000 threads to execute jobs (each a small file or one part of a multipart copy) and to look ahead 100,000 jobs.

After changing these settings, you can use the simple aws s3 sync command (or aws s3 cp with --recursive) as usual; both pick up the new values.

On a system with a 4-core CPU and 16 GB of RAM, for cases like mine (3-50 GB files), the sync/copy speed went from about 9.5 MiB/s to 700+ MiB/s, a speed increase of 70x over the default configuration.
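A sketch of the tuning described above, assuming the default CLI profile. The helper name tune_s3_transfers is hypothetical, while default.s3.max_concurrent_requests and default.s3.max_queue_size are the AWS CLI's S3 transfer settings. The 1000 and 100000 values are the ones from this answer, not a general recommendation; on a smaller machine or connection, lower values may work better.

```shell
#!/bin/sh
# Hypothetical helper: raise the AWS CLI's S3 transfer parallelism for the default profile.
# Usage: tune_s3_transfers [MAX_CONCURRENT_REQUESTS] [MAX_QUEUE_SIZE]
tune_s3_transfers() {
  local requests="${1:-1000}" queue="${2:-100000}"
  # Number of transfer jobs (small files or multipart parts) run at once.
  aws configure set default.s3.max_concurrent_requests "${requests}"
  # How many pending jobs the CLI queues up ahead of the running ones.
  aws configure set default.s3.max_queue_size "${queue}"
}
```

Run it once; aws configure set stores the values in ~/.aws/config, and later aws s3 sync and aws s3 cp runs use them.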

If you use Firefox with S3Fox, that DOES let you select all files (shift-select the first and last), right-click, and download them all. I've done it with 500+ files without problems.

When on Windows, my preferred GUI tool for this is Cloudberry Explorer for S3: http://www.cloudberrylab.com/free-amazon-s3-explorer-cloudfront-IAM.aspx. It has a fairly polished, FTP-like file explorer interface.

You can do this with mc: https://github.com/minio/mc

mc also supports sessions, resumable downloads, uploads and many more. mc supports Linux, OS X and Windows operating systems. Written in Golang and released under Apache Version 2.0.
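A minimal sketch, assuming mc is installed and an alias for Amazon S3 has already been configured (for example with mc alias set). The alias s3, the function name, and the paths are hypothetical; mc mirror copies the bucket's contents into the local directory.

```shell
#!/bin/sh
# Hypothetical helper; assumes an mc alias (e.g. "s3") already points at Amazon S3.
# Usage: mc_download_bucket ALIAS BUCKET LOCAL_DIR
mc_download_bucket() {
  local alias_name="$1" bucket="$2" dest="$3"
  # mirror copies every object under ALIAS/BUCKET into LOCAL_DIR.
  mc mirror "${alias_name}/${bucket}" "${dest}"
}
```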

If you have only files there (no subdirectories), a quick solution is to select all the files (click on the first, Shift+click on the last) and hit Enter, or right-click and select Open. For most data files this will download them straight to your computer.

The AWS SDK is the best option for uploading an entire folder or repo to S3 and for downloading an entire S3 bucket locally.

For downloads, you can also pass a path such as BucketName/Path to download a particular folder in S3.

To add another GUI option, we use WinSCP's S3 functionality. It's very easy to connect, only requiring your access key and secret key in the UI. You can then browse and download whatever files you require from any accessible buckets, including recursive downloads of nested folders.

Since it can be a challenge to clear new software through security and WinSCP is fairly prevalent, it can be really beneficial to just use it rather than try to install a more specialized utility.

Windows users can download S3 Browser from this link, which also has installation instructions: http://s3browser.com/download.aspx

Then provide your AWS credentials (access key, secret key, and region) to S3 Browser. Configuration instructions are at this link (copy and paste it into your browser): s3browser.com/s3browser-first-run.aspx

All your S3 buckets will now be visible in the left panel of S3 Browser.

Simply select the bucket, click the Buckets menu in the top left corner, and then select the Download all files to option from the menu.

Then browse to a folder to download the bucket to a particular place.

Click OK and your download will begin.

aws s3 sync is the perfect solution. It does not sync both ways; it is one-way from source to destination. Also, if you have lots of items in the bucket and are downloading to an EC2 instance in a VPC, it is a good idea to create an S3 VPC endpoint first, so that the download happens faster (traffic stays on the AWS network instead of going over the internet) and without extra charges.
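A sketch of creating such an endpoint with the AWS CLI, assuming the download runs on an EC2 instance in a VPC. The helper name and the IDs in the usage line are hypothetical placeholders. When no --vpc-endpoint-type is given, create-vpc-endpoint creates a Gateway endpoint, which is the no-cost type for S3; the region should be the one your bucket lives in.

```shell
#!/bin/sh
# Hypothetical helper: create an S3 gateway endpoint for a VPC.
# Usage: create_s3_gateway_endpoint VPC_ID REGION ROUTE_TABLE_ID
create_s3_gateway_endpoint() {
  local vpc_id="$1" region="$2" route_table_id="$3"
  # With no --vpc-endpoint-type given, the endpoint type defaults to Gateway.
  aws ec2 create-vpc-endpoint \
    --vpc-id "${vpc_id}" \
    --service-name "com.amazonaws.${region}.s3" \
    --route-table-ids "${route_table_id}"
}
```

For example: create_s3_gateway_endpoint vpc-0123 us-east-1 rtb-0456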

Here is some code to download all buckets, list them, and list their contents.

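A shell sketch of the same idea, assuming a configured AWS CLI: list every bucket with aws s3api list-buckets, then sync each one into its own subdirectory. The function names and the backup directory are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers built on the AWS CLI.

# List every object in one bucket: list_bucket BUCKET
list_bucket() {
  aws s3 ls "s3://$1" --recursive
}

# Download every bucket the credentials can list into ROOT_DIR/<bucket-name>.
# Usage: download_all_buckets [ROOT_DIR]
download_all_buckets() {
  local root="${1:-.}" bucket
  # --query/--output text prints just the bucket names, separated by whitespace.
  for bucket in $(aws s3api list-buckets --query 'Buckets[].Name' --output text); do
    aws s3 sync "s3://${bucket}" "${root}/${bucket}"
  done
}
```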

As Neel Bhaat has explained in this blog, there are many different tools that can be used for this purpose. Some are provided by AWS, while most are third-party tools. All these tools require you to save your AWS access key and secret in the tool itself. Be very cautious when using third-party tools, as the credentials you save in them could cost you dearly if they leak.

Therefore, I always recommend using the AWS CLI for this purpose. You can simply install it from this link. Next, run aws configure and save your key and secret values in the AWS CLI.

Then use aws s3 sync to sync your AWS S3 bucket to your local machine (the local machine should have the AWS CLI installed).

Examples:

1) From AWS S3 to local storage

2) From local storage to AWS S3

3) From one AWS S3 bucket to another bucket
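The three examples above map onto aws s3 sync as follows, in a sketch assuming a configured CLI. The function names are hypothetical, and each takes bucket names without the s3:// prefix.

```shell
#!/bin/sh
# Hypothetical helpers; bucket arguments are plain names without the s3:// prefix.

# 1) From AWS S3 to local storage: s3_to_local BUCKET LOCAL_DIR
s3_to_local() {
  aws s3 sync "s3://$1" "$2"
}

# 2) From local storage to AWS S3: local_to_s3 LOCAL_DIR BUCKET
local_to_s3() {
  aws s3 sync "$1" "s3://$2"
}

# 3) From one bucket to another: s3_to_s3 SOURCE_BUCKET TARGET_BUCKET
s3_to_s3() {
  aws s3 sync "s3://$1" "s3://$2"
}
```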

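If you only want to download the bucket from AWS, first install the AWS CLI on your machine. In a terminal, change to the directory where you want to download the files and run aws s3 sync with your bucket (s3://yourbucketname) as the source and . (the current directory) as the destination.

If you also want to sync both the local and S3 directories (in case you added some files to the local folder), run aws s3 sync again with . as the source and the bucket as the destination.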
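Both steps together, as a hypothetical helper sync_both_ways (assuming a configured AWS CLI). This is two one-way syncs rather than a true two-way merge: nothing is deleted on either side because --delete is not used, but a file changed in both places is not conflict-checked.

```shell
#!/bin/sh
# Hypothetical helper: pull new objects from the bucket, then push new local files back.
# Usage: sync_both_ways BUCKET [LOCAL_DIR]
sync_both_ways() {
  local bucket="$1" dir="${2:-.}"
  aws s3 sync "s3://${bucket}" "${dir}"   # S3 -> local
  aws s3 sync "${dir}" "s3://${bucket}"   # local -> S3
}
```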

As @Layke said, downloading files with the S3 CLI is best practice, since it is safe and secure. But in some cases people need to use wget to download a file, and here the solution is aws s3 presign.

This will get you a temporary public URL (the presign_url) that you can use to download content from S3, in your case with wget or any other download client.
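A minimal sketch, assuming a configured AWS CLI and wget; presigned_wget and the object path in the usage line are hypothetical. aws s3 presign prints the URL, and --expires-in sets its lifetime in seconds (3600 is the CLI's default).

```shell
#!/bin/sh
# Hypothetical helper: create a temporary public URL for one object and fetch it with wget.
# Usage: presigned_wget s3://BUCKET/KEY OUTPUT_FILE [EXPIRES_SECONDS]
presigned_wget() {
  local s3_uri="$1" out="$2" expires="${3:-3600}" url
  # aws s3 presign prints the presigned URL on stdout.
  url="$(aws s3 presign "${s3_uri}" --expires-in "${expires}")" || return 1
  wget -O "${out}" "${url}"
}
```

For example: presigned_wget s3://mybucket/report.csv report.csv 600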

My comment doesn't really add a new solution. As many people here said, aws s3 sync is the best. But nobody pointed out a powerful option: --dryrun. This option lets you see what would be downloaded from or uploaded to S3 when you use sync. This is really helpful when you don't want to overwrite content either locally or in an S3 bucket. This is how it is used:

aws s3 sync <source> <destination> --dryrun

I used it all the time before pushing new content to a bucket, so as not to upload undesired changes.
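A sketch of that habit as a hypothetical helper, assuming a POSIX shell and a configured AWS CLI: it prints the --dryrun plan first and only runs the real sync if you answer y.

```shell
#!/bin/sh
# Hypothetical helper: preview a sync with --dryrun, then ask before running it for real.
# Usage: sync_with_preview SOURCE DESTINATION
sync_with_preview() {
  local src="$1" dst="$2" plan answer
  # --dryrun prints what would be transferred without changing anything.
  plan="$(aws s3 sync "${src}" "${dst}" --dryrun)" || return 1
  if [ -z "${plan}" ]; then
    echo "Nothing to transfer."
    return 0
  fi
  printf '%s\n' "${plan}"
  printf 'Apply these changes? [y/N] ' >&2
  read -r answer
  if [ "${answer}" = "y" ]; then
    aws s3 sync "${src}" "${dst}"
  fi
}
```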

Try this command:

aws s3 sync s3://yourBucketnameDirectory yourLocalDirectory


For example, if your bucket name is mybucket and your local directory is c:\local, then:

aws s3 sync s3://mybucket c:\local

For more information about the AWS CLI, check this: AWS CLI installation

AWS CLI is the best option to download an entire S3 bucket locally.


Install AWS CLI.

Configure AWS CLI for using default security credentials and default AWS Region.

To download the entire S3 bucket, use the command:

aws s3 sync s3://yourbucketname localpath

Reference to use AWS cli for different AWS services: https://docs.aws.amazon.com/cli/latest/reference/

protected by brasofilo Oct 26 '18 at 21:42
